Why Your Engineering Teams Need to Rethink Code Review in the Age of AI

Good Code Isn’t Enough—We Need to Review the Thinking Behind It

tl;dr

Code review is no longer just about correctness—it’s about reasoning and relevance.

AI generates more code, but humans must judge fit, intent, and impact.

New risks: plausible but flawed logic, fragmented styles, security gaps, and tool noise.

Reviewers must shift from syntax to systems thinking and business alignment.

Let AI handle the mechanical checks; humans review the thinking behind the code.

The Shift

As a software architect who has spent years immersed in the rhythms of development teams, I’ve seen how the traditional code review process has anchored engineering culture: ensuring quality, building shared understanding, and mentoring developers. But over the past few years, with tools like GitHub Copilot, Claude Code, and Cursor entering the mainstream, that rhythm has shifted in ways that challenge many of the assumptions we’ve held about development workflows.

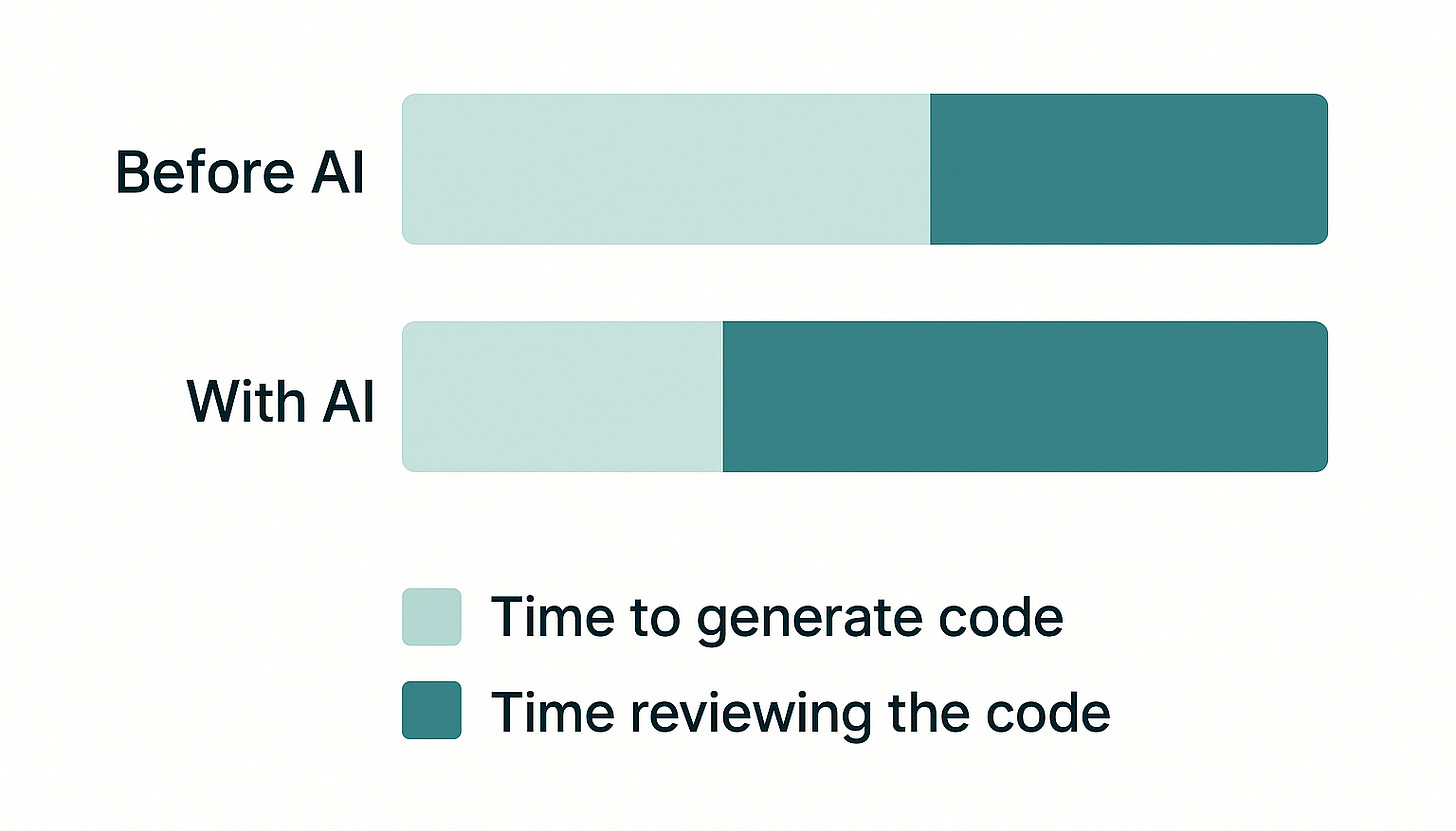

Codebases are growing faster than ever. Teams no longer struggle with a lack of output; instead, they’re buried in pull requests. This surge creates a mismatch between code generation speed and review capacity. More importantly, we’re not just reviewing what someone wrote; we’re often reviewing what an AI wrote for someone. That means we can’t stop at “Is this correct?” We also need to ask, “Is this the right approach to the problem?” and “Does this code serve the product and business strategy it’s meant to enable?”

Code Review is Evolving

In the traditional sense, code review was primarily a checkpoint—focused on syntax, logic, style, and catching bugs before merge. It ensured code conformed to team standards and passed the eye test of a peer. Today, code review is no longer just about catching bugs or enforcing conventions. It’s about understanding the thinking behind the code. The real work is shifting from checking lines to uncovering logic—why a particular design was chosen, how it fits the broader architecture, and whether it anticipates the right kinds of change.

AI now sits alongside us in two roles: writing code and reviewing it. As a co-coder, it handles the tedious scaffolding and even proposes architectural patterns. As a co-reviewer, AI assists with a growing range of tasks: it flags issues, enforces code style, suggests improvements, and increasingly, drafts pull request comments with contextual explanations. These capabilities are expanding the scope of what automated tools can do—not just spotting defects, but initiating review conversations. By handling repetitive and surface-level checks, AI allows human reviewers to focus their attention where it matters most: system coherence, architectural intent, and the nuanced interplay between code and product strategy.

We also need to recognize that these AI tools come with their own embedded assumptions and biases. They’re trained on vast datasets that don’t reflect our unique team norms, product architecture, or organizational risk tolerance. That makes human review more important, not less.

New Challenges in Code Review

While AI tools assist with review, they also introduce new challenges when the code under review is AI-generated. Developers are now often reviewing code that no human wrote by hand, which can be a strange new experience.

1. Code That Looks Good But Isn’t Quite Right

AI-generated code can be syntactically clean and logically structured, yet subtly wrong. It may misrepresent domain logic or cut corners on data integrity. These issues don’t surface in syntax; they hide in intent. And they often slip past static analysis tools and test suites, especially when the edge cases are tied to business rules the AI can’t fully grasp.
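
A hypothetical illustration: the two functions below compute a discounted price, and both the names and the pricing rule are invented for this example rather than taken from any real codebase. Both read cleanly and agree on a typical happy-path test, but only the second encodes an assumed business rule (discounts must stay between 0 and 100 percent and may never push a price below a floor), which is the kind of intent a reviewer has to check for.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def apply_discount_plausible(price: Decimal, pct: Decimal) -> Decimal:
    """Clean and readable, passes a happy-path test, but ignores domain rules."""
    return (price * (1 - pct / 100)).quantize(CENT, rounding=ROUND_HALF_UP)


def apply_discount_checked(price: Decimal, pct: Decimal, floor: Decimal) -> Decimal:
    """Same arithmetic plus a hypothetical business rule a reviewer would ask about."""
    if not Decimal(0) <= pct <= Decimal(100):
        raise ValueError(f"discount must be between 0 and 100 percent, got {pct}")
    discounted = (price * (1 - pct / 100)).quantize(CENT, rounding=ROUND_HALF_UP)
    # Assumed rule: never sell below the configured floor price.
    return max(discounted, floor)


# A typical unit test cannot tell the two apart...
print(apply_discount_plausible(Decimal("100"), Decimal("10")))  # 90.00
print(apply_discount_checked(Decimal("100"), Decimal("10"), Decimal("20")))  # 90.00
# ...but an out-of-range input exposes the missing rule.
print(apply_discount_plausible(Decimal("100"), Decimal("150")))  # -50.00
```

The arithmetic is identical in both versions; what differs is whether anyone asked what should happen outside the happy path.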

2. Style and Fragmentation

Every developer prompts AI a little differently. The result? A codebase filled with inconsistent abstractions, varying naming conventions, and different problem-solving idioms. It’s harder to read, harder to maintain, and definitely harder to review. In larger teams, this leads to a loss of cohesion and a breakdown in readability norms that used to be quietly enforced through code reviews.

3. Invisible Risks: Security and Compliance

LLMs are trained on vast public datasets, so they sometimes suggest outdated libraries, deprecated methods, or insecure packages. Reviewers have to be vigilant, not just about the code itself but about the dependencies it pulls in. In regulated industries, this introduces serious audit and compliance risks. In the worst case, AI can introduce subtle vulnerabilities that look entirely normal during a quick review.
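
One hypothetical example of a vulnerability that can look entirely normal at a glance is SQL assembled with string formatting, a pattern common in older public code that an assistant may reproduce. The sketch uses Python's standard `sqlite3` module with an invented `users` table: the first function is injectable, while the second uses a parameterized query so user input is passed as data rather than spliced into the SQL text.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Reads naturally, but interpolating input into SQL allows injection.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Parameterized query: the driver binds `name` as a value, never as SQL.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

payload = "x' OR '1'='1"
print(len(find_user_unsafe(conn, payload)))  # 2: every row leaks
print(len(find_user_safe(conn, payload)))  # 0: treated as a literal name
```

Both functions pass a test that looks up `"alice"`; only an adversarial input reveals the difference, which is why this class of issue is easy to miss in a quick review.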

4. Developer Understanding Gets Murky

When code is largely AI-generated, how do you assess whether a developer actually understands it? As reviewers, we’re left guessing: Did they merely prompt this code, or do they own it? This matters for mentoring, for sharing knowledge across the team, and for deciding how far to trust someone’s judgment in review decisions.

5. Tool Overload and Contradictions

Today’s code review ecosystem is crowded with overlapping signals: linters flag formatting issues, CI/CD tools enforce constraints, AI models inject comments and suggestions, and peer reviewers bring subjective perspectives. The result is a tangle of sometimes redundant, sometimes contradictory feedback. Developers are left navigating a noisy blend of warnings, suggestions, and best practices, unsure which ones truly matter. Reviewers, in turn, must act as curators—filtering what’s valuable and contextualizing conflicting inputs. Without clear prioritization, this overload can dilute focus, erode trust in tooling, and lead to inconsistent review outcomes across the team.
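
One way to act as that curator is to normalize every signal into a single list, collapse duplicates, and sort by severity before a human reads anything. The sketch below is a minimal illustration under assumed conventions: the `Finding` record, the source labels, and the four severity levels are hypothetical and would need to be mapped from whatever your linters, CI, and AI reviewers actually emit.

```python
from dataclasses import dataclass

# Hypothetical severity scale shared by all tools; lower rank = more urgent.
SEVERITY_RANK = {"blocker": 0, "major": 1, "minor": 2, "nit": 3}


@dataclass(frozen=True)
class Finding:
    source: str    # e.g. "linter", "ci", "ai", "human" (assumed labels)
    path: str
    line: int
    severity: str  # one of the SEVERITY_RANK keys
    message: str


def triage(findings: list[Finding]) -> list[Finding]:
    """Collapse duplicate findings and order the rest by severity, then location."""
    best: dict[tuple[str, int, str], Finding] = {}
    for f in findings:
        key = (f.path, f.line, f.message.strip().lower())
        current = best.get(key)
        # Keep the most severe report when several tools flag the same thing.
        if current is None or SEVERITY_RANK[f.severity] < SEVERITY_RANK[current.severity]:
            best[key] = f
    return sorted(best.values(), key=lambda f: (SEVERITY_RANK[f.severity], f.path, f.line))


report = triage([
    Finding("linter", "api.py", 12, "nit", "Line too long"),
    Finding("ai", "api.py", 40, "minor", "Possible unhandled None"),
    Finding("ci", "api.py", 40, "blocker", "possible unhandled none"),
    Finding("human", "db.py", 7, "major", "Does this need a migration?"),
])
for f in report:
    print(f.severity, f.path, f.line, f.source)
```

Exact-match deduplication is deliberately crude; genuinely contradictory advice (one tool requesting a change that another flags) still needs a human decision.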

6. Reviewer Fatigue

More code, more reviews, more mental strain. And because the stakes are higher, reviewing AI-assisted code can actually take more energy, not less. Over time, this can erode the team’s ability to sustain high-quality discussions, especially as volume pressures grow.

7. Business-Logic Blind Spots

AI is excellent at surfacing code snippets that solve technical problems. But it doesn’t know your roadmap, your customer personas, or your SLAs. That means reviewers now carry the additional responsibility of interpreting whether AI-generated code respects the business logic that governs your product decisions.

What We Should Really Be Reviewing

We need to reframe the review process around reasoning rather than syntax, and shift from a mindset of gatekeeping to one of shared architectural and business stewardship. This is especially crucial now, when AI-generated code often presents surface-level plausibility while masking subtle design flaws or strategic misalignments. The challenge isn’t just in spotting bugs or validating features—it’s in assessing whether a solution genuinely serves the product, users, and organization.

To do this well, we must consider the larger system of intent that surrounds any code change. That means asking: What problem is this solving? Why is this the right solution here, in this system, at this time? What trade-offs were made—performance versus maintainability, time-to-market versus long-term flexibility? Does the code harmonize with the surrounding architectural patterns? More importantly, does it align with business priorities such as roadmap milestones, technical debt tolerance, customer impact, or compliance risk?

AI excels at scanning for low-level issues: missing null checks, absent error handling, inconsistent styles, deprecated methods. It can generate and enforce many of the mechanical parts of software development at scale. But it has no intuition for trade-offs, no understanding of what matters most to this business, in this sprint, for these users. That higher-order reasoning, the kind that balances architecture with adaptability or technical constraints with strategic direction, remains a human strength. Reviewers must now move up the stack: from syntax to systems thinking, from catching flaws to evaluating fitness.

In this sense, the code review becomes a junction where technical reasoning meets product vision. It is not enough to verify correctness; we must interrogate relevance. We need to assess whether the reasoning behind the code reflects sound judgment under the constraints the team faces—from sprint deadlines to SLA requirements to strategic pivots. It becomes a conversation about alignment: of code to system, of system to purpose, and of purpose to intent.

When teams adopt this lens, reviews stop being transactional rituals and become vehicles for building shared understanding. They become a strategic function where engineering, product, and business logic intersect: an essential checkpoint not just for merging code, but for converging goals.

How We Can Make This Work

Beyond review mechanics, teams should cultivate new heuristics and mindsets for reviewers to effectively evaluate the reasoning and intent behind code (especially AI-written code). Here are some practical tips and checklists for reviewers focused on the “thinking” aspect:

1. Rethink Review Culture

Don’t just verify what the code does—probe into why it’s done that way. If the PR doesn’t have a clear rationale, ask the author to explain their reasoning (and if the author is effectively the AI, this exercise forces the human to ensure they understand it too). For instance: “Why did we choose this data structure? Did we consider alternatives?” or “What scenario is this error handling for—have we documented that requirement?” These questions uncover whether the developer (or AI) thought through the design and reinforce the idea that passing tests isn’t enough—the solution must make sense in context.

2. Focus on Intent and Impact, Not Details

With AI handling details, human reviewers should intentionally elevate their perspective. Structure your review comments around higher-level concerns: “Does this code meet the acceptance criteria of the story? Will this change have any downstream effects on module X? Is there a scenario where this breaks? Is the approach consistent with our architecture?” A human reviewer should devote less attention to style (assuming the linter or AI has taken care of it) and more to questions like: Is the new public API well designed and necessary? Are all error conditions handled, with rollback where needed?

3. Use Systematic Checklists for AI-Specific Pitfalls

It can help to have a checklist for reviewing AI-generated code, to remind reviewers of common weaknesses to look for. Based on collective experience, such a checklist could include:

Edge Cases Covered?

Security Concerns?

Performance and Scaling?

Consistency with Project Patterns?

Clarity and Maintainability?

Tests Present and Valid?

By going through such a checklist, reviewers systematically evaluate the “thinking” behind the code from multiple angles, reducing the risk of oversights. Some teams even build these checks into their AI prompts, for example asking the AI to list potential edge cases or to explain how the code handles security, which the human can then verify.
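
A checklist like this is easy to keep as data so it can be dropped into every PR template or review comment. The sketch below assumes a team stores the items in code and renders them as GitHub-style Markdown task lists (`- [ ]` and `- [x]`); the function names are hypothetical.

```python
AI_REVIEW_CHECKLIST = (
    "Edge cases covered?",
    "Security concerns?",
    "Performance and scaling?",
    "Consistency with project patterns?",
    "Clarity and maintainability?",
    "Tests present and valid?",
)


def render_checklist(checked: set[str]) -> str:
    """Render the checklist as Markdown task-list lines, ticking completed items."""
    return "\n".join(
        f"- [{'x' if item in checked else ' '}] {item}" for item in AI_REVIEW_CHECKLIST
    )


def outstanding(checked: set[str]) -> list[str]:
    """Items the reviewer has not yet confirmed, in checklist order."""
    return [item for item in AI_REVIEW_CHECKLIST if item not in checked]


done = {"Edge cases covered?", "Tests present and valid?"}
print(render_checklist(done))
print("Still to check:", outstanding(done))
```

Keeping the items in one place means the PR template, the review bot, and any AI prompt can all draw on the same list instead of drifting apart.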

4. AI-Generated Code Contributions

If a PR is known to be largely AI-generated (e.g., a developer used an AI to create a new module), consider a more formal review process. Some companies require that any AI-generated code be indicated in the PR description. The review might then involve an extra step where the author and reviewer do a walkthrough together to ensure understanding. The team playbook may say: “If more than 50% of a PR is AI-generated, assign an extra reviewer or do a team code walk-through before merge.” This ensures that AI-written code doesn’t slip in without at least two pairs of human eyes agreeing on it. It’s also a training opportunity: less experienced developers can learn during the review why the AI’s approach is good or bad.
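
The playbook rule quoted above is simple enough to encode as a pre-merge check. A minimal sketch, assuming the team can obtain an estimate of AI-generated lines (for example from the author's disclosure in the PR description; how that number is measured is left open here). The names are hypothetical, and the 50% threshold comes from the example playbook.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewPlan:
    reviewers: int     # number of human reviewers required before merge
    walkthrough: bool  # whether a team code walkthrough is scheduled


def plan_review(
    total_lines: int,
    ai_lines: int,
    prefer_walkthrough: bool = False,
    base_reviewers: int = 1,
    threshold: float = 0.5,  # "more than 50%", as in the example playbook
) -> ReviewPlan:
    """Escalate review when the disclosed AI-generated share exceeds the threshold."""
    if total_lines <= 0 or not 0 <= ai_lines <= total_lines:
        raise ValueError("need total_lines > 0 and 0 <= ai_lines <= total_lines")
    if ai_lines / total_lines <= threshold:
        return ReviewPlan(reviewers=base_reviewers, walkthrough=False)
    # Escalate with either an extra reviewer or a team walkthrough, per the playbook.
    if prefer_walkthrough:
        return ReviewPlan(reviewers=base_reviewers, walkthrough=True)
    return ReviewPlan(reviewers=base_reviewers + 1, walkthrough=False)


print(plan_review(total_lines=400, ai_lines=300))
# ReviewPlan(reviewers=2, walkthrough=False)
print(plan_review(total_lines=400, ai_lines=200))
# ReviewPlan(reviewers=1, walkthrough=False)
print(plan_review(total_lines=400, ai_lines=300, prefer_walkthrough=True))
# ReviewPlan(reviewers=1, walkthrough=True)
```

Because the rule says "more than 50%", a PR that is exactly half AI-generated does not escalate; teams that want a stricter cutoff can lower `threshold`.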

5. Leverage AI to Assist the Reviewer

Finally, don’t forget that reviewers themselves can use AI as a sidekick. For instance, if you’re confronted with a large AI-written module, you could ask an AI to summarize the code’s function or to generate potential test cases. OpenAI’s research found that a model like CriticGPT can help human reviewers spot significantly more issues in AI-written code than they would alone [1].

The Upside of Reviewing Thoughtfully

When we focus on reviewing the thinking behind the code, here’s what we gain:

Better Use of Time: Let AI handle formatting and linting—we focus on architecture, design, and impact.

Stronger Teams: Reviews become conversations about trade-offs and intent—not just pointing out mistakes.

Better Systems: When code is reviewed for thinking, not just correctness, we build systems that age better.

Faster Learning: Developers learn more when we explain reasoning, not just enforce rules.

Greater Product Alignment: When reviews consider business context, code becomes an active driver of product and business outcomes, not just an implementation artifact.

Resilient Culture: Over time, a reasoning-first review culture reinforces architectural literacy and shared ownership, reducing handoff costs and fragility.

Conclusion: Review Thinking, Not Just Code

As an architect, I’ve come to believe this: “Code review is no longer just about reviewing code; we should now be reviewing the thinking behind the code.” In an AI-assisted world, the mechanical parts of code review are increasingly handled by tools, freeing human developers to review reasoning.

AI has made generating code easy. And reviewing it, to some extent. But understanding, trusting, and evolving that code? That still requires a human in the loop.

Bottom line: The practice of code review is evolving from a line-by-line gatekeeping exercise into a conceptual, mentorship-driven, high-level evaluation of system fitness and alignment. Teams that embrace this shift, leveraging AI for efficiency while focusing human energy on why the code exists, will build better software faster, with greater cohesion and clarity, and with systems that hold up for the long haul.

References:

[1] OpenAI, “Finding GPT-4’s mistakes with GPT-4.” https://openai.com/index/finding-gpt4s-mistakes-with-gpt-4/